This important point seems obvious in retrospect:

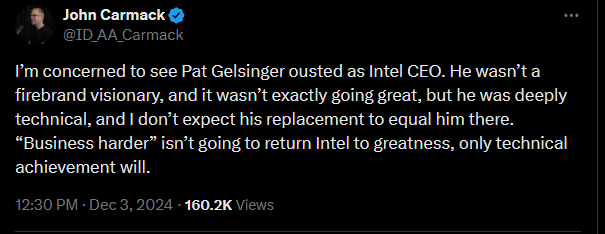

In addition to the CEO, the Board of Directors of any enterprise whose core products are engineered must have enough of an engineering background to make a clear-eyed assessment of the best path forward.

Usually, the importance of having technologists run technology companies finds expression in CEO selection. Microsoft suffered a lost decade during Steve Ballmer’s tenure as CEO. The story may be apocryphal, but Microsoft lore held that Ballmer’s signature accomplishment at Procter & Gamble had been to design packaging that literally crowded competitors off store shelves. In any case, he was a marketer, not a technologist, and Microsoft found its footing again after appointing Satya Nadella to replace him as CEO.

Boeing lost its way under CEOs whose backgrounds favored finance over engineering, with Jim “Prince Jim” McNerney referring to longtime engineers and skilled machinists as “phenomenally talented assholes” and encouraging their ouster from the company. The disastrous performances of the 787 and 737 MAX are widely attributed to Boeing’s embrace of financial as opposed to aeronautical engineering.

For Intel’s part, the beginning of the end appears to date back to the CEOs appointed after Paul Otellini (May 2005-May 2013): Brian Krzanich (May 2013-June 2018) and especially ex-CFO Bob Swan (June 2018-January 2021) recorded tenures marred by acquisitions of dubious merit, blunders in product development, and a loss of Intel’s historic lead in semiconductor fabrication.

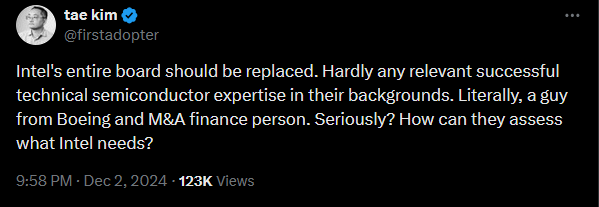

The commentary around Gelsinger’s ouster quickly coalesced into two camps, as summarized by Dr. Ian Cutress:

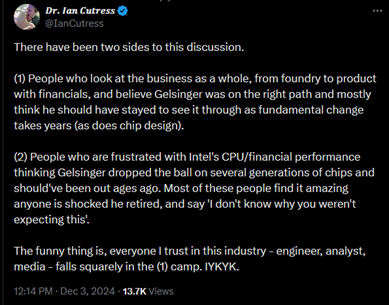

John Carmack made his presence known in the (1) camp with this tweet:

When Intel first announced that Pat Gelsinger would return as CEO, I was surprised to hear that he even wanted the role. Intel had been foundering for years, and Gelsinger was in a position to know just how deep a hole the company had dug for itself. He tried, and apparently failed, to set expectations with the public and with the board as to how long and difficult a road lay ahead. Breaking apart CPU product development and chip fabrication, as AMD did with GlobalFoundries almost 15 years ago, was the right thing to do. Lobbying for the enactment of the CHIPS Act, and soliciting federal subsidies to re-shore semiconductor manufacturing, also was the right thing to do. It was going to take a long time, and some serious politicking: on the one hand, layoffs seemed inevitable and necessary; on the other, Members of Congress being asked to support a chipmaker with billions of taxpayer dollars don’t want to hear about the need for layoffs.

It turned out to be too difficult an optimization problem to solve in the time allotted. To many, it doesn’t seem reasonable for Intel’s Board to have expected a turnaround in the time Gelsinger had at the helm, and it is disqualifying for them to oust him without a succession plan.

I am no fan of Intel. In its heyday, Intel indulged in anticompetitive practices that put to shame anything Microsoft attempted in the 1990s, and never got the regulatory scrutiny it deserved. Before Transmeta, there was Intergraph. AMD and NVIDIA eventually prevailed in antitrust settlements, and it is genuinely shocking to realize that as recently as 2016, Intel was paying $300M per quarter to NVIDIA as part of a $1.5B private antitrust settlement. How quickly the mighty have fallen!

At the same time, Intel’s steadfast commitment to executing on its core technical roadmap – improving the x86 architecture, without breaking backward compatibility – enabled it to bring volume business models that positively disrupted not only the PC industry (1980s), but also the workstation industry (1990s) and, later, HPC and data centers. Intel democratized computing in a way that few other companies can claim. For that, the company deserves our gratitude and respect, and we all should be pulling for its turnaround and a brighter future.

Unfortunately for Intel and the board, it is not at all clear that ousting Pat Gelsinger will lead to those favorable outcomes.